With the explosion of generative AI into almost every aspect of our lives – and certainly in education – the concept of ‘digital literacy’ has been much discussed. Indeed, one of Queen Mary’s graduate attributes is to ‘be AI and digitally literate’. In this article, the Digital Education Studio’s Dr Jo Elliott explores the concept of digital literacy and how we can help our students develop it.

What is (critical) digital literacy?

At its simplest, digital literacy is the ability to ‘use and evaluate’ digital tools (see Pangrazio, 2016, pp. 163-164 for a summary of different conceptualisations and definitions). However, in an age where technology is increasingly being used for surveillance and to inform, if not make, decisions about employment, policing and loan eligibility, I argue that we need to go beyond that definition and help our students develop critical digital literacy. This combines the technical skills required to select, evaluate and appropriately use digital tools with an understanding of the systems, sociocultural contexts and power structures related to the production and operation of technologies. It may also include the ability to imagine, shape or create alternative tools, systems or practices (Pangrazio, 2016).

Critical digital literacy and AI

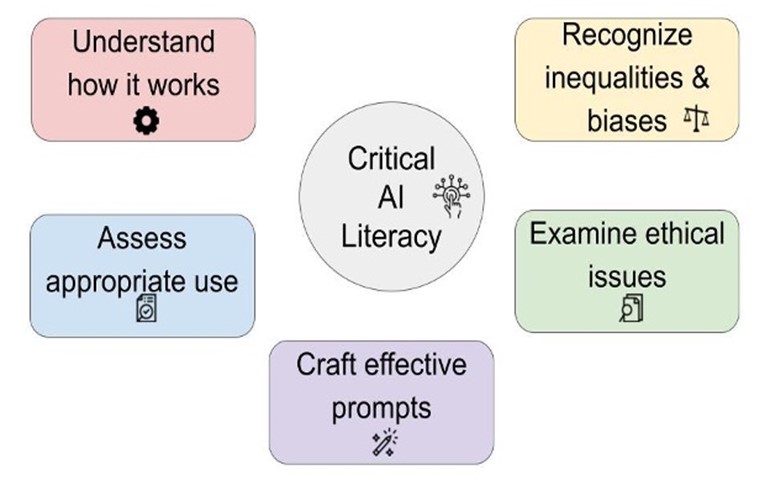

When it comes to applying a critical digital literacy lens to AI, Maha Bali’s framework for critical AI literacy is an excellent place to start, highlighting five key capabilities:

- Understanding how gen AI works

- Recognising inequalities and biases within gen AI

- Examining ethical issues in gen AI

- Crafting effective prompts

- Assessing appropriate uses of gen AI

Image credit: Bali, 2024, LSE Blogs

Developing critical digital literacy

So how can we help students develop their critical digital literacy? Here are some strategies or activities you could incorporate into your teaching:

- List tools or uses

Collaboratively brainstorm lists of digital tools or uses of particular digital technologies relevant to your discipline, either as students or practitioners. Discuss what these tools might be used for, their strengths and weaknesses, and how to decide whether a particular tool or platform is right for a task.

- Review the terms of use

How often do we take the time to read the fine print when signing up for a new platform? Ask your students to find and read the terms of use for a platform or tool, and then discuss them together – what have we agreed to, how is our data being used and for what purposes, how is it protected, and is there anything that surprised or concerned them? OpenAI’s (ChatGPT) terms of use can be a good place to start.

- Compare and critique gen AI output

Ask your students to use a gen AI platform (e.g. ChatGPT, Bard or Google Gemini) to answer questions related to a topic you are exploring together, and then work in small groups to compare and critique the different responses. They might consider any patterns, similarities or inconsistencies they observe; the impact of different AI prompts; and how confident they are about the accuracy, reliability or comprehensiveness of the AI responses.

- Co-create rules of engagement

Discuss and negotiate an agreed set of guidelines for using particular technologies – either within your module or programme, or more broadly, e.g. as doctors, dentists or researchers. Consider what uses are acceptable or unacceptable and in what circumstances, the roles of transparency and privacy, and any professional standards or codes of conduct that might be relevant.

- Consider the bigger picture

Ask students to reflect on and discuss some ‘bigger picture’ questions about the use of technologies. For example, how might using particular tools influence how they approach or think about a problem, task or idea? How might using a digital tool as a shortcut impact their development of important skills – could they do that task successfully themselves if they didn’t have access to the tool? Would they feel comfortable telling relevant others that they had used a particular digital tool, and how they had used it? Which voices or groups of people might be impacted, excluded or harmed by our use of particular technologies, and which voices or groups might benefit or be amplified? What motives or other ends might particular technologies serve?

You might have noticed that discussion is key to the strategies and activities suggested above. It is important that we create space for, and encourage, our students to discuss, reflect on and ask questions about digital technologies, their use and their impacts. But that doesn’t mean you have to have all of the answers – these are questions and conversations we need to work through together, and often there is no single right answer. Modelling willingness to admit not knowing and openness to exploring these sticky problems together provides another valuable lesson for our students.

Find out more

Maha Bali’s blog ‘Reflecting Allowed’ is packed with insightful reflections on the use of technology in education, alongside practical strategies for teaching and discussing these with students.

JISC Digital Capabilities resources include a Quickguide to developing students’ digital literacies.

Jesse Stommel explores ideas around critical digital pedagogies.

Anna Mills shares insights and resources on critical digital and AI literacies, and teaching writing in an age of AI.

References

Bali, M. (2024). Where are the crescents in AI? LSE Blogs – Higher Education, 26 February 2024. https://blogs.lse.ac.uk/highereducation/2024/02/26/where-are-the-crescents-in-ai/

Pangrazio, L. (2016). Reconceptualising critical digital literacy. Discourse: Studies in the Cultural Politics of Education, 37(2), 163–174. https://doi.org/10.1080/01596306.2014.942836